An Image Generation Method for Automated Driving Based on Improved GAN


摘要:

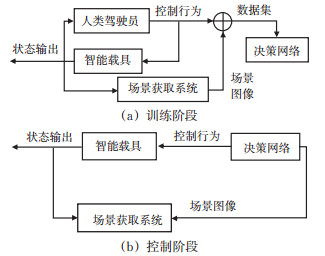

基于端到端数据系统的自动驾驶系统对驾驶图像存在巨大需求。为解决一般生成式对抗网络模型在扩充驾驶图像数据集时不稳定及生成图像特征缺乏多样性的问题,研究1种改进网络模型LS-InfoGAN。结合最小二乘对抗损失防止模型梯度消失,并缓解生成器优化矛盾,提升模型训练稳定性。通过最大化生成图像与真实图像间的互信息提升生成器特征学习能力,改善生成图像特征多样性。利用转置卷积层还原图像特征,提升生成图像特征清晰度。以自主构建的模拟驾驶场景中获取的带标签驾驶图像集对模型有效性及其数据集扩充应用效果进行验证。实验分析表明:相比改进前模型,LS-InfoGAN模型的图像生成过程稳定性平均提升35%;使用此模型扩充的数据集进行端到端自动驾驶系统中决策网络的训练能在不采集新图像的情况下将系统决策性能提升1%~2%;建议使用此模型扩充图像数据集时将生成图像数量设置为原始训练集图像数量的1~2倍。

Abstract:There is a huge demand for driving images in the automated driving systems based on end-to-end data system. In order to solve the instability of general generative adversarial network model and the lack of diversity of generated image features when expanding the driving image data set, this work proposed an improved network model, LS-InfoGAN. The least-squares loss is used to prevent the model gradient from disappearing and alleviate the contradiction in the generator during optimization, thereby improving stability of the model. The learning ability of the generator is improved by maximizing mutual information between generated images and actual images, thus improving the diversity of its features. The transposed convolutional layer to restore the image features is used to improve the clarity of the generated image features. The effectiveness and application performance of the model are verified with a labeled image dataset acquired in self-built driving scenes. According to the academic analysis in this study, compared with the model before the improvement, the stability of the image generation process of the LS-InfoGAN model is improved by an average of 35%。Besides, when used for training in the decision network of end-to-end self-driving systems, the augmented dataset can improve the decision performance by 1% to 2% without acquiring new images. The recommended number of generated images is 1 to 2 times the number of original images when the model is used to augment the dataset.
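The two loss terms named in the abstract can be sketched in plain Python. LSGAN replaces the sigmoid cross-entropy of the original GAN with squared errors: the discriminator minimizes 0.5·E[(D(x) − b)²] + 0.5·E[(D(G(z)) − a)²] and the generator minimizes 0.5·E[(D(G(z)) − c)²]. In the standard InfoGAN formulation, the mutual-information term is a variational lower bound on the mutual information between a latent code and the generated image, maximized by minimizing the cross-entropy of an auxiliary network Q that predicts the code from the image. This is a minimal sketch, not the paper's implementation: it assumes the common target coding a = 0, b = 1, c = 1, a categorical latent code, and a hypothetical weight `lam = 1.0`. The section states none of these settings, and the networks that would produce the discriminator outputs and Q logits are omitted.

```python
import math


def lsgan_d_loss(d_real, d_fake, a=0.0, b=1.0):
    """Least-squares discriminator loss: pull D(x) toward b and D(G(z)) toward a."""
    real_term = sum((d - b) ** 2 for d in d_real) / len(d_real)
    fake_term = sum((d - a) ** 2 for d in d_fake) / len(d_fake)
    return 0.5 * (real_term + fake_term)


def lsgan_g_loss(d_fake, target=1.0):
    """Least-squares generator loss: pull D(G(z)) toward `target` (c in LSGAN notation).

    The gradient w.r.t. each D output is (d - target), so samples far from the
    target keep receiving a non-zero signal.
    """
    return 0.5 * sum((d - target) ** 2 for d in d_fake) / len(d_fake)


def info_loss(code_labels, q_logits):
    """Mean cross-entropy -log Q(code | G(z, code)) for a categorical latent code.

    Minimizing this maximizes InfoGAN's lower bound on the mutual information;
    in InfoGAN it is minimized w.r.t. both the generator and Q.
    """
    total = 0.0
    for label, logits in zip(code_labels, q_logits):
        m = max(logits)  # log-sum-exp shift for numerical stability
        log_norm = m + math.log(sum(math.exp(v - m) for v in logits))
        total += log_norm - logits[label]
    return total / len(code_labels)


def generator_objective(d_fake, code_labels, q_logits, lam=1.0):
    """Hypothetical LS-InfoGAN generator objective: LS adversarial term + lam * info term."""
    return lsgan_g_loss(d_fake) + lam * info_loss(code_labels, q_logits)


# Two generated samples with a three-valued categorical code (e.g. left / straight / right).
d_fake = [0.2, 0.6]
codes = [0, 2]
q_logits = [[2.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
print(generator_objective(d_fake, codes, q_logits))
```

Both terms are differentiable functions of the network outputs. In a real model they would be written in an autodiff framework, but the arithmetic is the same.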
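The abstract says the generator uses transposed convolutional layers to restore image features. The following sketch shows what such a layer does along one dimension: each input value scatters a scaled copy of the kernel into a larger output. The size formula is the one documented for PyTorch's `ConvTranspose2d`, applied per spatial dimension. The DCGAN-style layer configuration (kernel 4, stride 2, padding 1, 1×1 to 64×64) is a hypothetical example and is not taken from the paper.

```python
def conv_transpose_out_size(n_in, kernel, stride=1, padding=0, output_padding=0, dilation=1):
    """Output length of a transposed convolution along one spatial dimension."""
    return (n_in - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1


def conv_transpose1d(x, w, stride=1, padding=0):
    """Naive 1-D transposed convolution (dilation 1, output_padding 0).

    Each input value is multiplied by the whole kernel and scatter-added into
    the output at offset i * stride; `padding` then crops that many elements
    from both ends.
    """
    full_len = (len(x) - 1) * stride + len(w)
    full = [0.0] * full_len
    for i, xi in enumerate(x):
        for k, wk in enumerate(w):
            full[i * stride + k] += xi * wk
    return full[padding:full_len - padding]


# Hypothetical DCGAN-style generator: 1x1 latent map -> 64x64 image.
sizes = [1]
sizes.append(conv_transpose_out_size(sizes[-1], kernel=4, stride=1, padding=0))
for _ in range(4):
    sizes.append(conv_transpose_out_size(sizes[-1], kernel=4, stride=2, padding=1))
print(sizes)  # [1, 4, 8, 16, 32, 64]

print(conv_transpose1d([1.0, 2.0], [1.0, 1.0, 1.0], stride=2))
```

With stride 2, each layer roughly doubles the spatial size. This is why a short stack of such layers can expand a latent vector into a full-resolution image.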


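Table 1. Configuration of the training and testing dataset

| Dataset | Source | Left turn (images) | Straight (images) | Right turn (images) |
|---|---|---|---|---|
| Training set | 80% of original images | 2 607 | 3 152 | 2 606 |
| Testing set | 20% of original images | 652 | 788 | 652 |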
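The counts in Table 1 are consistent with a per-class (stratified) 80/20 split. For every class, the training count equals 80% of the class total (training plus testing), rounded down. The sketch below reproduces that split under stated assumptions: floor rounding and a seeded shuffle. The section does not say how the split was actually drawn, and the "images" here are placeholder indices.

```python
import math
import random
from fractions import Fraction


def stratified_split(samples_by_class, train_frac=Fraction(4, 5), seed=0):
    """Shuffle each class independently; put floor(train_frac * n) items in training."""
    rng = random.Random(seed)
    train, test = {}, {}
    for label, samples in samples_by_class.items():
        items = list(samples)
        rng.shuffle(items)
        n_train = math.floor(len(items) * train_frac)  # exact rational product, then floor
        train[label] = items[:n_train]
        test[label] = items[n_train:]
    return train, test


# Per-class totals = training + testing counts from Table 1.
totals = {"left": 2607 + 652, "straight": 3152 + 788, "right": 2606 + 652}
train, test = stratified_split({c: range(n) for c, n in totals.items()})
for c in totals:
    print(c, len(train[c]), len(test[c]))
```

`Fraction(4, 5)` avoids floating-point error in the product, so a class size that is an exact multiple of 5 splits exactly.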

Table 2. Results of image-generation training

| Model | Successes | Failures | Success rate/% |
|---|---|---|---|
| CGAN | 11 | 19 | 36.67 |
| ACGAN | 19 | 11 | 63.33 |
| InfoGAN | 16 | 14 | 53.33 |
| LS-InfoGAN | 26 | 4 | 86.67 |
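Each model in Table 2 was trained 30 times (successes plus failures equal 30 in every row). The success rate is the number of successful runs divided by the total number of runs. This section does not define what counts as a successful run, so the sketch below only reproduces the arithmetic of the last column.

```python
def success_rate(successes, failures):
    """Percentage of successful training runs, rounded to two decimals as in Table 2."""
    return round(100 * successes / (successes + failures), 2)


runs = {"CGAN": (11, 19), "ACGAN": (19, 11), "InfoGAN": (16, 14), "LS-InfoGAN": (26, 4)}
rates = {model: success_rate(s, f) for model, (s, f) in runs.items()}
print(rates)
```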

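Table 3. Classification assessment results

| Augmentation method | Class | Precision, mean (SD)/% | Recall, mean (SD)/% |
|---|---|---|---|
| None | Straight | 89.9 (1.9) | 90.8 (4.0) |
| | Right turn | 90.6 (2.9) | 89.0 (2.7) |
| | Left turn | 84.0 (4.1) | 84.1 (4.0) |
| | Overall | 88.2 (1.1) | 88.0 (1.5) |
| LS-InfoGAN | Straight | 91.2 (1.3) | 93.5 (1.2) |
| | Right turn | 90.4 (3.2) | 91.3 (2.0) |
| | Left turn | 87.6 (1.9) | 84.8 (4.1) |
| | Overall | 89.7 (1.3) | 89.9 (1.1) |
| InfoGAN | Straight | 90.7 (2.9) | 92.6 (3.0) |
| | Right turn | 90.1 (3.5) | 91.1 (2.3) |
| | Left turn | 86.9 (4.6) | 85.3 (3.6) |
| | Overall | 89.3 (0.7) | 89.7 (0.7) |
| CGAN | Straight | 91.2 (1.5) | 90.4 (2.2) |
| | Right turn | 88.1 (2.8) | 89.2 (4.1) |
| | Left turn | 83.6 (2.5) | 83.1 (3.2) |
| | Overall | 87.7 (0.9) | 87.6 (0.9) |
| ACGAN | Straight | 92.0 (3.7) | 89.8 (4.4) |
| | Right turn | 89.3 (2.0) | 91.2 (2.4) |
| | Left turn | 83.9 (4.2) | 84.4 (3.5) |
| | Overall | 88.4 (1.4) | 88.5 (1.4) |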
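Table 3 reports per-class precision and recall as mean (standard deviation) over repeated training runs. The Overall rows match, to within 0.1 because of rounding, the unweighted mean of the three class rows. A minimal sketch of how such figures can be computed from a confusion matrix is shown below. It assumes macro-averaging for Overall and the sample standard deviation across runs. The number of runs and the exact SD definition are not stated in this section, and the confusion-matrix counts below are made up for illustration.

```python
import statistics

LABELS = ("left", "straight", "right")


def per_class_precision_recall(confusion):
    """confusion[true][pred] -> count; returns {class: (precision %, recall %)}."""
    scores = {}
    for c in LABELS:
        tp = confusion[c][c]
        predicted_as_c = sum(confusion[t][c] for t in LABELS)
        actually_c = sum(confusion[c][p] for p in LABELS)
        scores[c] = (100 * tp / predicted_as_c, 100 * tp / actually_c)
    return scores


def macro_average(scores):
    """Unweighted mean over classes (assumed meaning of the 'Overall' rows)."""
    return tuple(statistics.mean(s[i] for s in scores.values()) for i in (0, 1))


def mean_sd(values):
    """Format repeated-run results as 'mean (SD)' using the sample standard deviation."""
    return f"{statistics.mean(values):.1f} ({statistics.stdev(values):.1f})"


# Hypothetical counts for one run.
confusion = {
    "left": {"left": 8, "straight": 2, "right": 0},
    "straight": {"left": 1, "straight": 9, "right": 0},
    "right": {"left": 0, "straight": 0, "right": 10},
}
scores = per_class_precision_recall(confusion)
overall = macro_average(scores)
print(scores, overall)

# Check the macro-average reading against Table 3 ("None", precision column).
print(round(statistics.mean([89.9, 90.6, 84.0]), 1))
```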

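Table 4. Classification assessment results with different numbers of generated images

| Generated images | Class | Precision, mean (SD)/% | Recall, mean (SD)/% |
|---|---|---|---|
| 8 700 | Straight | 91.2 (1.3) | 93.5 (1.2) |
| | Right turn | 90.4 (3.2) | 91.3 (2.0) |
| | Left turn | 87.6 (1.9) | 84.8 (4.1) |
| | Overall | 89.7 (1.3) | 89.9 (1.1) |
| 17 400 | Straight | 92.3 (1.2) | 93.1 (1.5) |
| | Right turn | 91.5 (2.3) | 91.2 (2.5) |
| | Left turn | 87.4 (2.1) | 86.7 (2.5) |
| | Overall | 90.4 (0.7) | 90.4 (0.8) |
| 26 100 | Straight | 91.2 (1.2) | 93.4 (1.4) |
| | Right turn | 91.3 (1.5) | 90.1 (3.6) |
| | Left turn | 86.9 (2.9) | 85.9 (1.9) |
| | Overall | 89.8 (0.7) | 89.8 (1.0) |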
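The three augmentation sizes in Table 4 correspond to roughly 1×, 2× and 3× the 8 365-image training set in Table 1. This arithmetic is one way to read the recommendation of 1 to 2 times the training-set size against the table. In the sketch below, ranking by the sum of overall precision and recall is an illustrative choice only. It ignores the standard deviations, which are of similar size to the differences between the rows.

```python
TRAIN_IMAGES = 2607 + 3152 + 2606  # training-set size from Table 1 (8 365 images)

# Overall (precision, recall) means from Table 4, keyed by number of generated images.
overall = {8700: (89.7, 89.9), 17400: (90.4, 90.4), 26100: (89.8, 89.8)}


def augmentation_ratio(n_generated, n_train=TRAIN_IMAGES):
    """Generated images per original training image."""
    return n_generated / n_train


for n, (precision, recall) in overall.items():
    print(f"{n:>6} generated  ratio {augmentation_ratio(n):.2f}  "
          f"precision {precision}  recall {recall}")

best = max(overall, key=lambda n: overall[n][0] + overall[n][1])
print("best by precision + recall:", best)
```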

